SUPPLY CHAIN ANALYTICS

The concept of a supply chain comes from the network of exchange relationships that must exist and operate for a product or service to be created and delivered to its final customer. The steps involved in these two types of supply chain are presented in the following figures:

Product

Service

In our day-to-day life in India, we often buy vegetables, fruits, flowers and similar items straight from roadside vendors, expecting the best prices and the freshest produce. What we rarely think about is the path those items take from the producers to the consumers. For example, ‘VeggieKart’, a hyperlocal player operating in the city of Bhubaneswar (India), is an initiative that benefits both consumers and farmers by using its e-commerce platform to sell vegetables and fruits. It focuses on providing fresh, quality farm produce through a value-adding supply chain while giving a win-win return to farmers and consumers. The aim of this initiative is not only to create a symbiotic relationship between consumers and farmers but also to remove the middlemen from the value chain. The involvement of wholesalers and retailers lengthens delivery times considerably, and the example of ‘The Bouqs’ illustrates this.

The Bouqs Company is a cut-to-order, farm-to-table, eco-friendly flower retailer that delivers straight from farms. The company knows how to save money at each phase of the supply chain, from farms to flower recipients. Typically, flowers go from farm to wholesaler, wholesaler to retailer and retailer to consumer, using up about 17 days of the lifespan of a commodity that lasts only 21 days. The Bouqs aims to change the flower industry for the better with farm-to-door flowers that are cut only once a customer places an order and that arrive on day 4 rather than on day 17. Supply chain analytics allows The Bouqs to continually analyze historical sales data from its farms and feed it into its systems to determine what is available for sale. This is linked to the company’s estimate of how much of any given type of flower is likely to sell in any given month, so that its farm network can satisfy that demand.
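The planning loop described for The Bouqs (historical sales in, expected volume per flower type per month out, checked against what the farms can supply) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not The Bouqs’ actual system: the sales history, the `farm_capacity` figures and the "average of the same month in past years" forecasting rule are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical history: (year, month, flower type, stems sold)
history = [
    (2022, 2, "rose", 9000), (2023, 2, "rose", 11000),
    (2022, 2, "tulip", 3000), (2023, 2, "tulip", 3400),
    (2022, 5, "rose", 5000), (2023, 5, "rose", 6000),
]
# Hypothetical number of stems the farm network can cut per month, by flower type
farm_capacity = {"rose": 9500, "tulip": 4000}

def seasonal_forecast(rows):
    """Forecast each (flower, month) as the average of the same month in past years."""
    by_key = defaultdict(list)
    for _year, month, flower, stems in rows:
        by_key[(flower, month)].append(stems)
    return {key: mean(values) for key, values in by_key.items()}

def capacity_gaps(forecast, capacity):
    """Return (flower, month, shortfall) wherever forecast demand exceeds farm capacity."""
    return [
        (flower, month, demand - capacity.get(flower, 0))
        for (flower, month), demand in sorted(forecast.items())
        if demand > capacity.get(flower, 0)
    ]

forecast = seasonal_forecast(history)
print(capacity_gaps(forecast, farm_capacity))  # [('rose', 2, 500)]
```

A real planner would use a richer forecast (trend, promotions, holidays such as Valentine’s Day), but the structure stays the same: forecast demand, then compare it with supply to find where the farm network falls short.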

Supply Chain Analytics is the streamlining of a business’s supply-side activities to maximize customer value and gain a competitive advantage in the marketplace. It represents the effort by suppliers to develop and implement supply chains that are as efficient and economical as possible. The potential benefits of Supply Chain Analytics are the following:

1. Using historical data to feed predictive models that support more informed decisions.

2. Identifying hidden inefficiencies to capture greater cost savings.

3. Using risk modeling to conduct “pre-mortems” around significant investments and decisions.

4. Using consumer and pricing analytics to provide the whole profitability picture.

Let’s think of Supply Chain Analytics in three forms: (a) Descriptive Analytics, which asks “Where am I today?”, (b) Predictive Analytics, which asks “With my current trajectory, where will I be headed tomorrow?”, and (c) Prescriptive Analytics, which asks “Where should I be?” 3
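The three forms can be made concrete with one toy product. The sketch below is an illustration built on assumptions: the weekly sales figures are invented, a straight-line trend stands in for "current trajectory", and the ordering rule (forecast plus safety stock minus stock on hand) is just one simple prescriptive policy.

```python
from statistics import mean

# Hypothetical weekly unit sales for one product (illustrative numbers only)
weekly_sales = [120, 126, 131, 138, 142, 149]

def describe(history):
    """Descriptive: where am I today? Summarize the recent past."""
    return {"last_week": history[-1], "average": mean(history)}

def predict_next(history):
    """Predictive: with my current trajectory, where will I be tomorrow?
    Fits a straight-line trend by ordinary least squares and extends it one week."""
    n = len(history)
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(history)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, history)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    return intercept + slope * n

def prescribe_order(forecast, on_hand, safety_stock):
    """Prescriptive: where should I be? Order enough to cover the forecast
    plus a safety buffer, and never a negative quantity."""
    return max(0, round(forecast + safety_stock - on_hand))

summary = describe(weekly_sales)
forecast = predict_next(weekly_sales)
order = prescribe_order(forecast, on_hand=60, safety_stock=20)
print(summary, round(forecast, 1), order)
```

Each layer feeds the next: the description summarizes history, the prediction extends it, and the prescription turns the prediction into an action.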

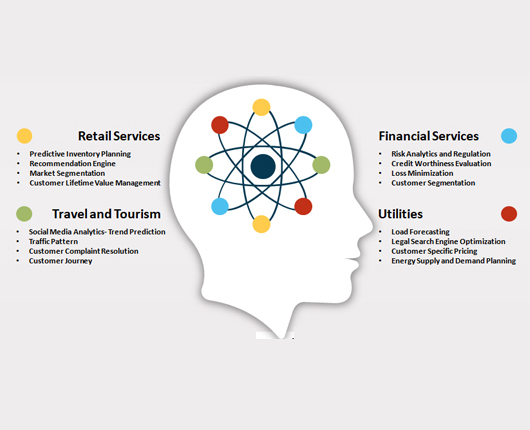

To gain insight into product data, a company can combine historical data with present-day factors that affect the production and sale of the product, such as weather, demographics, economic conditions and social media, to better analyze and predict sales. From a Supply Chain Analytics point of view, predictive models built from the available data with machine learning algorithms can estimate how long it takes to supply a product from the producer to the customer, including the middlemen. Consider Predictive Analytics 4 on the factory floor, the first rung of the supply chain ladder: any delay at this step has an obvious impact on supply chain performance. To tackle this, sensors are fitted to critical, capital-intensive production machinery to detect breakdowns before they occur, and this sensor data is analyzed to build predictive models for different failure conditions. 5 Thus, Predictive Analytics helps forecast demand from historical data and external factors related to the shipment of products, while giving the company visibility into its assets and operations.
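Combining historical data with an external factor such as weather can be sketched as a multiple linear regression. The sketch below makes several assumptions: the data is synthetic, generated from a known relationship so that the fit can be checked, and weekly temperature is a hypothetical stand-in for the external factors mentioned above. Ordinary least squares via `numpy.linalg.lstsq` is used here in place of a more complex machine learning model.

```python
import numpy as np

# Hypothetical history: week index and average temperature (°C) per week
weeks = np.arange(8)
temperature = np.array([18.0, 21.0, 19.0, 25.0, 27.0, 24.0, 30.0, 29.0])
# Synthetic sales generated from an assumed relationship, so the fit can be verified
sales = 50.0 + 4.0 * weeks + 2.5 * temperature

# Design matrix columns: intercept, time trend, one external factor (weather)
X = np.column_stack([np.ones_like(weeks, dtype=float), weeks, temperature])
coef, *_ = np.linalg.lstsq(X, sales, rcond=None)

def forecast(week, temp):
    """Predict demand for a future week given its expected temperature."""
    return coef @ np.array([1.0, week, temp])

print(np.round(coef, 2))             # recovered intercept, trend and weather effect
print(round(forecast(8, 31.0), 1))   # demand for week 8 if a 31 °C week is expected
```

With real data the fit will not be exact, and more factors (promotions, holidays, regional demographics) would be added as further columns of `X`.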

For a logistics company like UPS (United Parcel Service), which is fast-paced and well known for its shipment and delivery system, supply chain analytics is combined with sensor data from its delivery operations. Its drivers carry a wireless handheld device called the Delivery Information Acquisition Device (DIAD), which records real-time data from the moment the driver loads the shipment, including every scheduled and unscheduled stop on the way to the delivery address. This record of what has happened falls under descriptive analytics; using it to analyze and predict the delivery time of a shipment is predictive analytics. Having big data is only the starting point: the real question is how to use it to analyze the work the company does, and that is where analytics comes in.
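The step from descriptive to predictive analytics on a driver’s event log can be sketched as follows. The record layout `(timestamp, event, scheduled?)` is invented for illustration and is not the real DIAD data format. The "prediction" is deliberately naive and assumes that each remaining stop takes the average time per stop observed so far.

```python
from datetime import datetime

# Hypothetical event log for one route: (timestamp, event type, scheduled stop?)
events = [
    ("2024-05-06 08:00", "loaded", None),
    ("2024-05-06 08:40", "stop", True),
    ("2024-05-06 09:05", "stop", False),  # unscheduled stop, e.g. refuelling
    ("2024-05-06 09:30", "stop", True),
    ("2024-05-06 10:10", "stop", True),
]

def summarize_route(log):
    """Descriptive analytics: what has happened on this route so far?"""
    times = [datetime.strptime(ts, "%Y-%m-%d %H:%M") for ts, _, _ in log]
    stop_flags = [scheduled for _, kind, scheduled in log if kind == "stop"]
    elapsed = (times[-1] - times[0]).total_seconds() / 60
    return {
        "minutes_since_loading": elapsed,
        "scheduled_stops": sum(1 for s in stop_flags if s),
        "unscheduled_stops": sum(1 for s in stop_flags if not s),
        "avg_minutes_per_stop": elapsed / max(len(stop_flags), 1),
    }

def estimate_remaining_minutes(summary, remaining_stops):
    """Naive predictive step: assume future stops take the average time seen so far."""
    return remaining_stops * summary["avg_minutes_per_stop"]

summary = summarize_route(events)
print(summary)
print(estimate_remaining_minutes(summary, remaining_stops=4))
```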

From an analytics perspective, UPS built a model that would predict where every package was at every moment of the day, where it needed to go and why, so that the company could effectively “flip a bit” and change where a package is headed tomorrow. This made operations more efficient because drivers no longer started the day with an empty DIAD: it already held all the information they needed. In effect, the DIAD changed from an acquisition device into an assistant that guides drivers along their routes, saving a great deal of fuel and time. This is where the ORION (On-Road Integrated Optimization and Navigation) system comes in. ORION helped UPS cut 85 million miles driven in a year, which meant faster deliveries, 8.5 million fewer gallons of fuel consumed and, on the environmental side, 85,000 metric tons of carbon dioxide not emitted. 6
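ORION’s optimization algorithms are proprietary, so the sketch below does not reproduce them. It only illustrates the underlying idea that reordering the same set of stops can cut miles driven. It uses a textbook greedy heuristic (always drive to the nearest unvisited stop) on hypothetical straight-line coordinates.

```python
import math

def route_length(points, order):
    """Total straight-line distance when visiting points in the given order."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(order, order[1:]))

def nearest_neighbor_route(points, depot=0):
    """Greedy heuristic: from the current stop, always drive to the closest unvisited stop."""
    unvisited = set(range(len(points))) - {depot}
    order = [depot]
    while unvisited:
        here = points[order[-1]]
        nxt = min(unvisited, key=lambda i: math.dist(here, points[i]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order

# Hypothetical depot (index 0) and four delivery stops on a grid
points = [(0, 0), (5, 0), (1, 0), (2, 1), (6, 1)]
naive = list(range(len(points)))
greedy = nearest_neighbor_route(points)
print(greedy, round(route_length(points, naive), 2), round(route_length(points, greedy), 2))
```

Greedy routing is fast but can be far from optimal. Production systems add real road distances, delivery time windows and improvement steps such as 2-opt swaps.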

With the ORION system, UPS has what it calls “all services on board”: one driver, one vehicle, one service area and one facility, but with two different services per vehicle, namely premium service and deferred service, which helps it manage deliveries on a priority basis. Using geospatial technology, the DIAD helps reorganize the best route for the driver according to the delivery schedule. Predictive models of arrival times, built with machine learning on as much historical data as is available plus third-party data sources such as traffic and weather, estimate the time of arrival, and the customer is notified of it through the DIAD. Put in perspective, it is remarkable how data and analytics make the driver’s day, and supply chain management as a whole, more organized.
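Serving premium and deferred services from the same vehicle on a priority basis, and giving each stop an estimated time of arrival, can be sketched as below. This is a hypothetical simplification and not UPS’s method: the `Stop` fields, the service labels and the fixed per-stop `drive_minutes` are assumptions, whereas a real ETA model would depend on the route order, traffic and weather.

```python
from dataclasses import dataclass

@dataclass
class Stop:
    name: str
    service: str        # "premium" or "deferred" (hypothetical labels)
    commit_minute: int  # latest promised arrival, in minutes after route start
    drive_minutes: int  # assumed drive + handling time to reach this stop

def plan_and_eta(stops):
    """Serve premium stops first (earliest commitment first), then deferred ones,
    accumulating drive times into an estimated arrival for each stop."""
    ordered = sorted(stops, key=lambda s: (s.service != "premium", s.commit_minute))
    clock, plan = 0, []
    for s in ordered:
        clock += s.drive_minutes
        plan.append((s.name, clock, clock <= s.commit_minute))  # (stop, ETA, on time?)
    return plan

stops = [
    Stop("A", "deferred", 480, 20),
    Stop("B", "premium", 90, 30),
    Stop("C", "premium", 60, 25),
    Stop("D", "deferred", 480, 15),
]
for name, eta, on_time in plan_and_eta(stops):
    print(f"{name}: ETA {eta} min, on time: {on_time}")
```

The ETAs computed this way are what a customer notification would carry; a stop flagged as not on time would signal that the plan needs to change.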

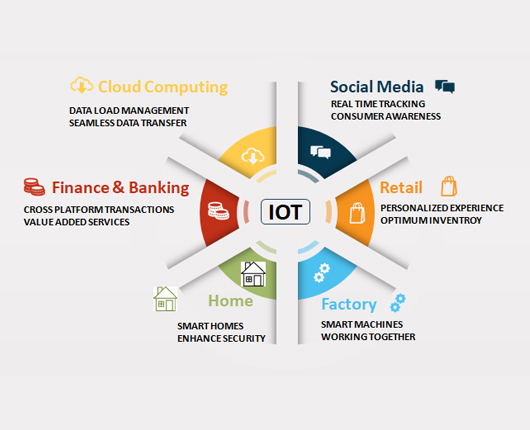

With better connections between the manufacturing unit, logistics, warehousing and supply chain processes, manufacturers have an opportunity to cut costs and meet business challenges. Using IoT (Internet of Things) sensor data together with statistical forecasting algorithms applied to real-time data improves producers’ ability to keep their delivery systems stable. This is ‘Supply Chain Analytics’.
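"Statistical forecasting algorithms used with real-time data" can be as simple as a forecast that updates each time a new sensor reading arrives. The sketch below uses simple exponential smoothing, one common choice that is assumed here rather than taken from the text. The smoothing factor `alpha` and the readings are hypothetical.

```python
class StreamingForecaster:
    """Simple exponential smoothing, updated as each new reading arrives:
    level_t = alpha * observation_t + (1 - alpha) * level_(t-1)."""

    def __init__(self, alpha, initial_level):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.level = initial_level

    def update(self, observation):
        """Blend the new reading into the level; the level is the next-step forecast."""
        self.level = self.alpha * observation + (1 - self.alpha) * self.level
        return self.level

# Hypothetical hourly throughput readings streamed from a production line
forecaster = StreamingForecaster(alpha=0.5, initial_level=100.0)
for reading in [110, 120, 90]:
    print(forecaster.update(reading))
```

A larger `alpha` reacts faster to new readings, and a smaller one smooths out sensor noise; that trade-off is the main design choice.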

Another good example is SAP IBP (Integrated Business Planning), which provides functionality for sales and operations, demand, inventory, supply and response planning; together with SAP Supply Chain Control Tower, it can help supply chain planners develop sales, inventory and operations plans accurately and collaboratively. IBP’s Supply Chain Control Tower, which is available only in the cloud, combines data from several systems, including SAP ERP, non-SAP systems and third-party systems, and uses that data in conjunction with signals from IoT devices in manufacturing, logistics and related information networks. It acts as a supply chain collaboration hub where all stakeholders can simulate, visualize, analyze and predict the information and possible outcomes they need to resolve issues, remediate risks and improve business performance. With Supply Chain Control Tower, cause-and-effect and what-if simulations help planners see how a disruption to manufacturing, logistics, transportation or supply chain operations will affect the business. These analytics enable planners to ensure that corrective and preventive measures are put in place to eliminate or minimize the factors that lead to supply chain nightmares.
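The idea behind a what-if simulation can be shown with a toy inventory projection: run the same plan with and without a hypothetical supply outage and compare the outcomes. This sketch does not use SAP IBP or its APIs. The function name, the parameters and the assumption that unmet demand is lost (rather than backordered) are all illustrative.

```python
def project_inventory(start, daily_supply, daily_demand, days, outage=None):
    """Project end-of-day inventory. `outage` is an optional (first_day, last_day)
    window during which supply drops to zero, modelling a disruption."""
    level, levels, stockout_days = start, [], 0
    for day in range(days):
        supply = daily_supply
        if outage and outage[0] <= day <= outage[1]:
            supply = 0
        level += supply - daily_demand
        if level < 0:
            stockout_days += 1
            level = 0  # assumption: unmet demand is lost, not backordered
        levels.append(level)
    return levels, stockout_days

baseline, base_stockouts = project_inventory(100, 60, 50, days=10)
disrupted, stockouts = project_inventory(100, 60, 50, days=10, outage=(2, 5))
print("baseline:", baseline, "stockout days:", base_stockouts)
print("with outage:", disrupted, "stockout days:", stockouts)
```

Comparing the two runs shows when the disruption starts to hurt and how long recovery takes, which is the kind of signal that prompts corrective or preventive action.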